This repository was archived on 2025-03-30. You can view files and clone it, but you cannot open issues or pull requests or push commits.

ollama-intel-gpu

This repo illustrates the use of Ollama with SYCL-based support for Intel ARC GPUs. Run the recently released Meta llama3.1 or Microsoft phi3 models locally on a PC with an Intel ARC GPU, using Linux or Windows WSL2.

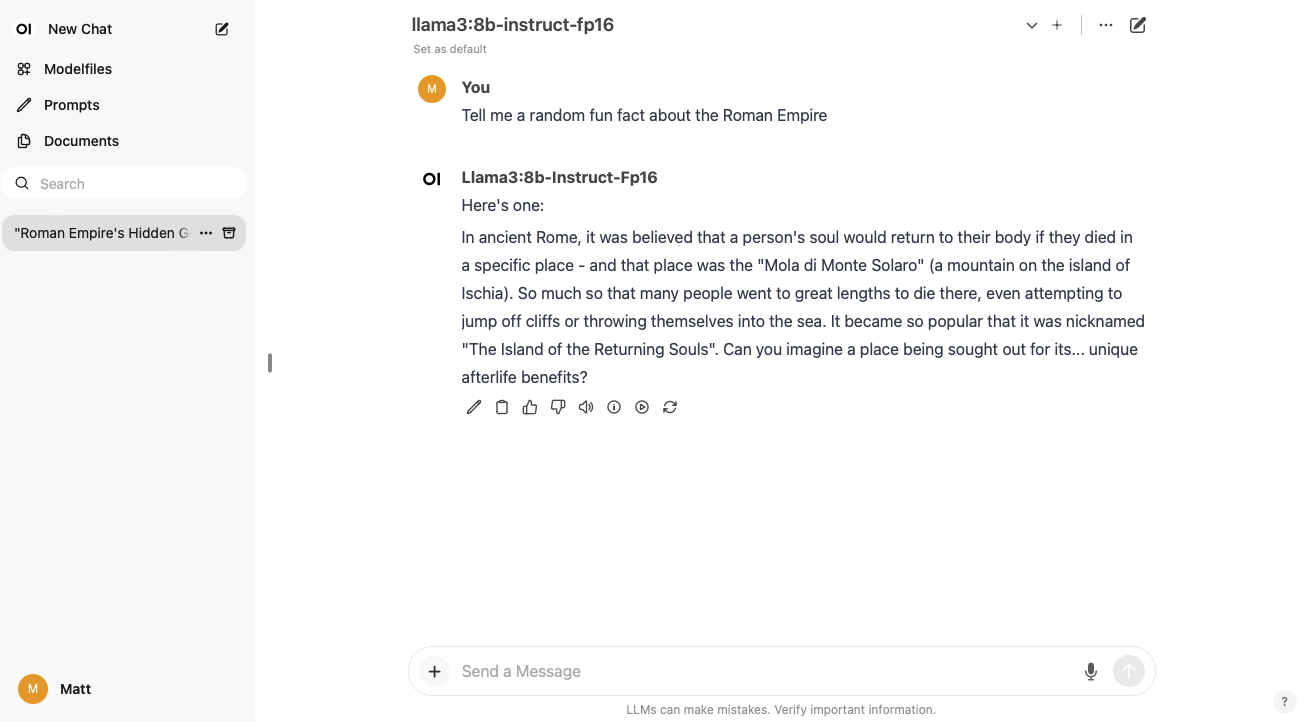

Screenshot

Prerequisites

- Ubuntu 24.04 or newer (needed for Intel ARC GPU kernel driver support; tested with Ubuntu 24.04), or Windows 11 with WSL2 (graphics driver 101.5445 or newer)

- Docker and Docker Compose installed (for Linux), or Docker Desktop (for Windows)

- Intel ARC series GPU (tested with Intel ARC A770 16GB and Intel(R) Core(TM) Ultra 5 125H integrated GPU)
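
On native Linux, one quick way to sanity-check the GPU driver prerequisite is to look for a DRM render node under `/dev/dri`. The sketch below is an illustration only: `has_render_node` is a hypothetical helper name, not part of this repo, and a render node only shows that some GPU driver is loaded, not that the device is an Intel ARC GPU. It does not apply to WSL2, which exposes the GPU differently.

```shell
#!/bin/sh
# Hypothetical helper (not part of this repo): succeeds if the given directory
# contains at least one DRM render node (renderD128, renderD129, ...).
# Defaults to /dev/dri, where the Linux kernel places these nodes.
has_render_node() {
    dri_dir="${1:-/dev/dri}"
    for node in "$dri_dir"/renderD*; do
        # If the glob matched nothing, the pattern stays literal and -e fails.
        [ -e "$node" ] && return 0
    done
    return 1
}

# Example usage:
#   has_render_node && echo "render node found" || echo "no render node under /dev/dri"
```

If the check fails on Ubuntu, the kernel or graphics driver is the first thing to look at before building the containers.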

Usage

The following commands build Ollama with Intel ARC GPU support and compose it with the public Docker image of Open WebUI from https://github.com/open-webui/open-webui

Linux:

$ git clone https://github.com/mattcurf/ollama-intel-gpu

$ cd ollama-intel-gpu

$ docker compose up

Windows WSL2:

$ git clone https://github.com/mattcurf/ollama-intel-gpu

$ cd ollama-intel-gpu

$ docker-compose -f docker-compose-wsl2.yml up

Then open http://localhost:3000 in your web browser to reach the web UI. Create a local Open WebUI credential, click the settings icon in the top right of the screen, select 'Models', click 'Show', and download a model such as 'llama3.1:8b-instruct-q8_0' for an Intel ARC A770 with 16GB VRAM.
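
The first build can take a while, so the web UI may not answer immediately after `docker compose up`. As a rough sketch, a small polling loop can tell you when http://localhost:3000 starts responding. `wait_for_url` is a hypothetical helper introduced here for illustration, and the attempt count and delay defaults are arbitrary assumptions; it relies only on `curl` being installed.

```shell
#!/bin/sh
# Hypothetical helper (not part of this repo): poll a URL until it responds
# successfully or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
    url="$1"
    attempts="${2:-30}"   # assumed default: 30 tries
    delay="${3:-2}"       # assumed default: 2 seconds between tries
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -s: silent, -f: treat HTTP errors (>= 400) as failure, -o: discard the body
        if curl -sf -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example usage (URL taken from the instructions above):
#   wait_for_url http://localhost:3000 && echo "web UI is up"
```

Run it from a second terminal while the containers start; a non-zero exit status means the page never responded within the allotted attempts.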
